Abstract

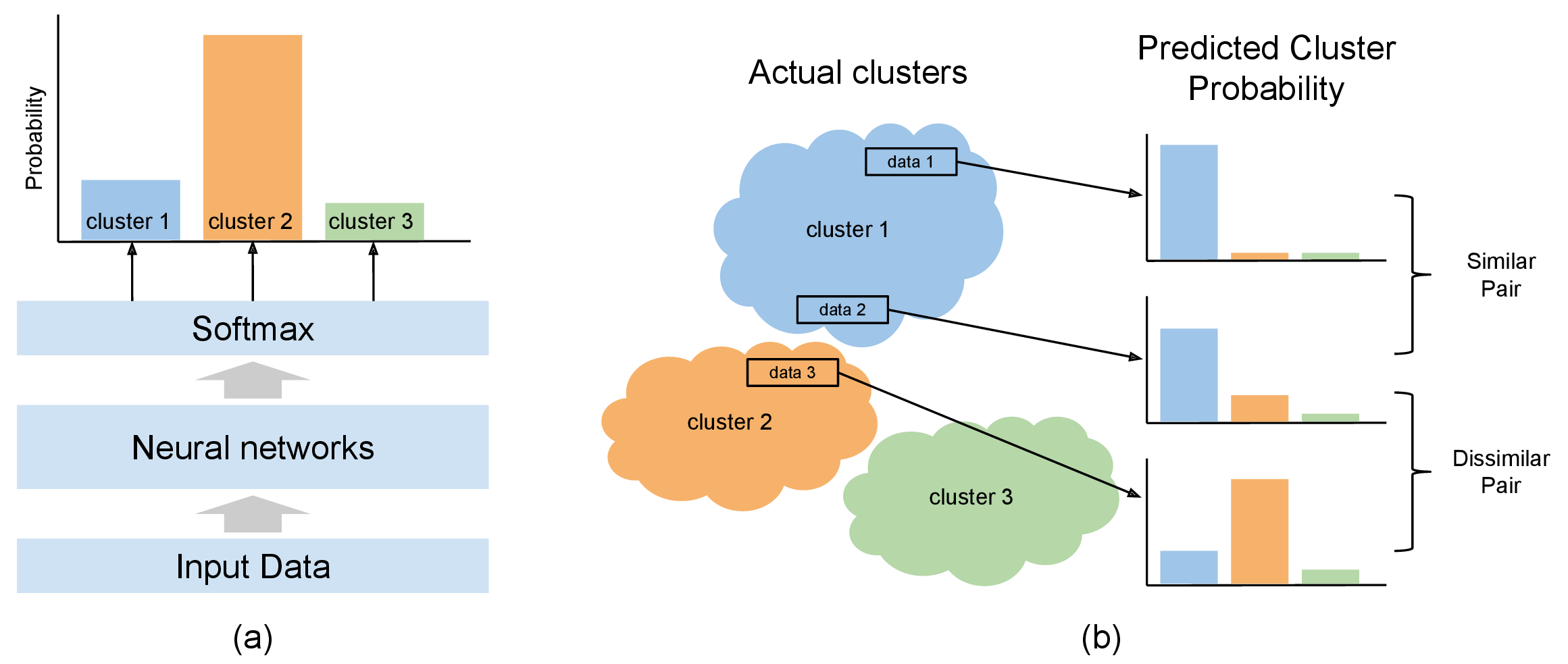

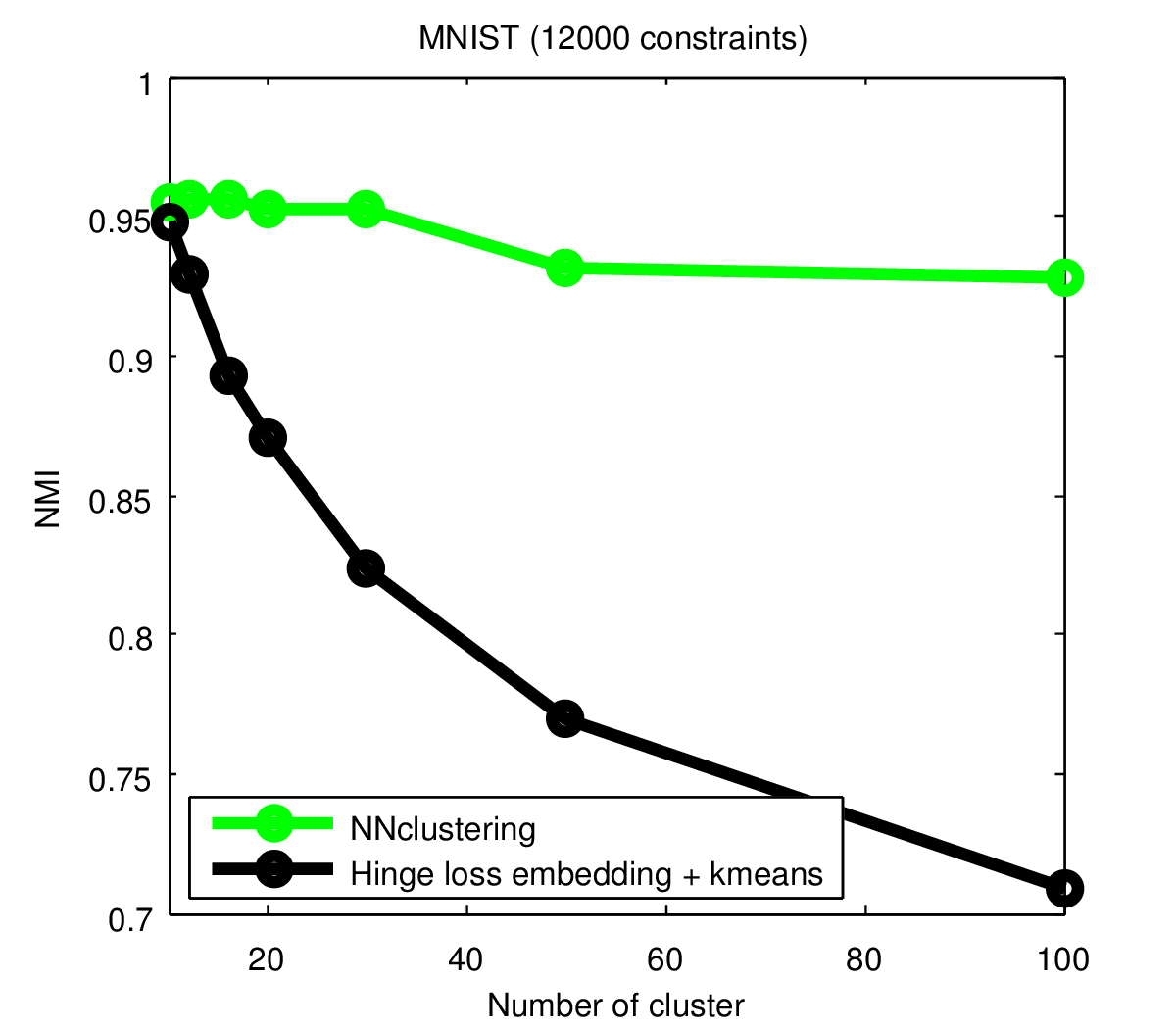

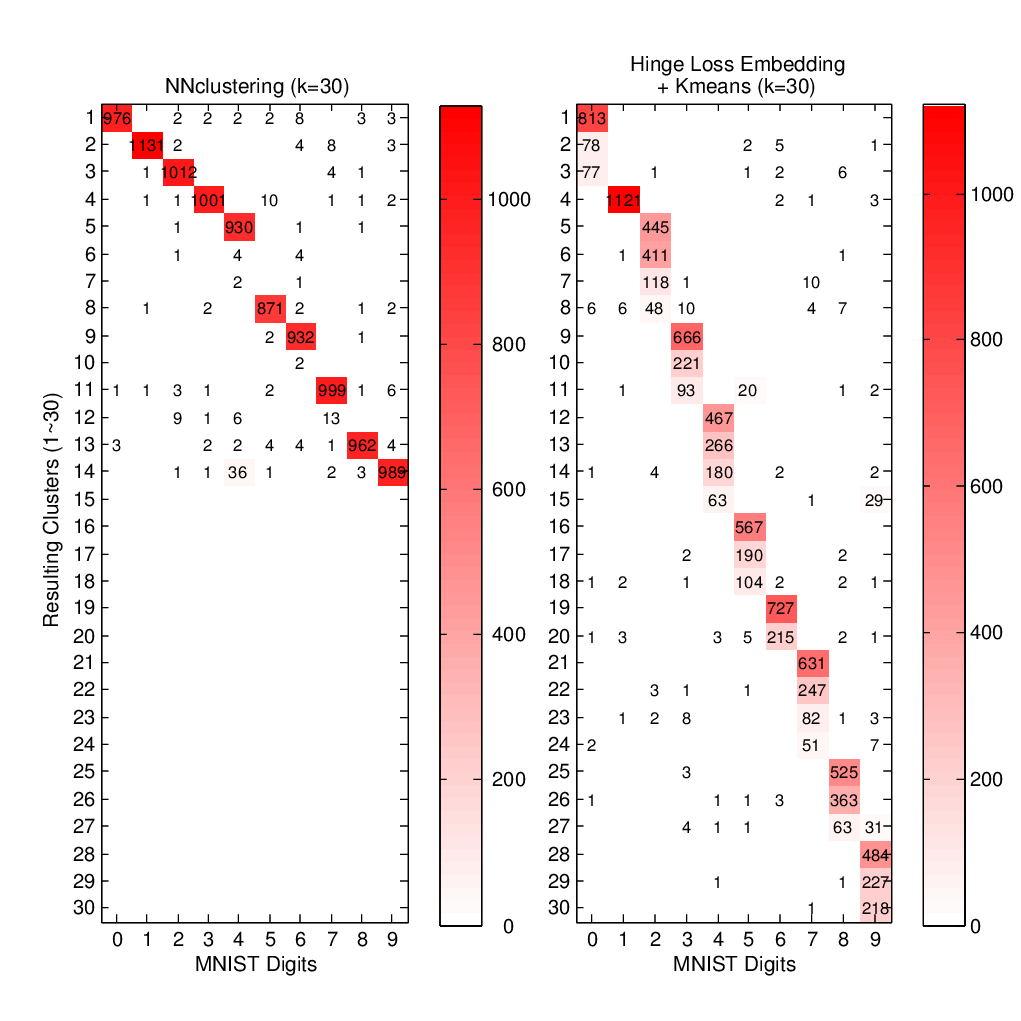

This paper presents a neural network-based end-to-end clustering framework. We design a novel strategy that uses contrastive criteria to push data to form clusters directly from raw inputs, while also learning a feature embedding suited to that clustering. The network is trained with weak labels, specifically partial pairwise relationships between data instances. The cluster assignments and their probabilities are then obtained at the output layer with a forward pass over the data. The framework has the interesting characteristic that no cluster centers need to be explicitly specified, so the resulting cluster distribution is purely data-driven and no distance metric needs to be predefined. The experiments show that the proposed approach outperforms the conventional two-stage method (feature embedding followed by k-means) by a significant margin. Its performance also compares favorably with that of the standard cross-entropy loss for classification. Robustness analysis further shows that the method is largely insensitive to the number of clusters: the number of dominant clusters stays close to the true number of clusters even when a large k is used for clustering.
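
To make the idea of a contrastive criterion over pairwise constraints concrete, here is a minimal NumPy sketch. It assumes the network's output layer is a softmax over k clusters and measures the disagreement between two output distributions with KL divergence: similar pairs are pulled together, and dissimilar pairs are pushed apart up to a margin. The function names, the hinge form, and the margin value are illustrative assumptions, not taken from this page.

```python
import numpy as np

def softmax(logits):
    """Row-wise softmax; each row becomes a distribution over k clusters."""
    z = logits - logits.max(axis=1, keepdims=True)  # for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def kl_divergence(p, q, eps=1e-12):
    """Row-wise KL(p || q) between two batches of distributions."""
    return np.sum(p * (np.log(p + eps) - np.log(q + eps)), axis=1)

def directed_cost(p, q, similar, margin):
    """Assumed contrastive cost in one direction:
    similar pairs pay KL(p || q); dissimilar pairs pay a hinge on it."""
    d = kl_divergence(p, q)
    return np.where(similar, d, np.maximum(0.0, margin - d))

def pairwise_clustering_loss(p, q, similar, margin=2.0):
    """Symmetric loss averaged over pairs.

    p, q    : (n_pairs, k) cluster distributions for the two items of each pair
    similar : (n_pairs,) boolean array, True for a must-link pair
    margin  : hypothetical hinge margin for cannot-link pairs
    """
    cost = directed_cost(p, q, similar, margin) + directed_cost(q, p, similar, margin)
    return cost.mean()

# Two pairs with identical outputs: the similar pair costs nothing,
# the dissimilar pair pays the full margin in both directions.
p_same = softmax(np.array([[2.0, 0.0, 0.0], [2.0, 0.0, 0.0]]))
loss_same = pairwise_clustering_loss(p_same, p_same, np.array([True, False]))

# A dissimilar pair already assigned to different clusters costs nothing.
p_a = softmax(np.array([[10.0, 0.0, 0.0]]))
p_b = softmax(np.array([[0.0, 10.0, 0.0]]))
loss_apart = pairwise_clustering_loss(p_a, p_b, np.array([False]))
```

Because the cost is defined on output distributions rather than on distances to centroids, this kind of loss needs neither explicit cluster centers nor a predefined distance metric, which matches the property described in the abstract.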

Results

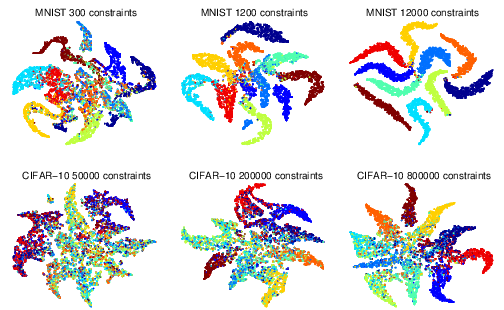

Clustering with Partial Constraints

Clustering with Unknown Number of Clusters
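
The abstract's notion of "dominant clusters" can be illustrated with a short sketch. This page does not define the term, so the code below assumes one plausible reading: a cluster is dominant if it receives at least a minimum fraction of the hard (argmax) assignments. The function name and the 1% threshold are hypothetical.

```python
import numpy as np

def count_dominant_clusters(probs, min_fraction=0.01):
    """Count clusters that receive at least `min_fraction` of the samples.

    probs        : (n_samples, k) cluster probabilities from the output layer
    min_fraction : assumed threshold for calling a cluster "dominant"
    """
    assignments = probs.argmax(axis=1)  # hard cluster index per sample
    counts = np.bincount(assignments, minlength=probs.shape[1])
    return int((counts / len(assignments) >= min_fraction).sum())

# Toy example: k = 5 output nodes, but only clusters 0 and 3 are ever used.
toy_probs = np.eye(5)[[0] * 50 + [3] * 50]
n_dominant = count_dominant_clusters(toy_probs)
```

With a deliberately large k, comparing this count against the true number of classes is one simple way to check the robustness claim.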

Bibtex

@article{hsu2016nnclustering,

title={Neural network-based clustering using pairwise constraints},

author={Yen-Chang Hsu and Zsolt Kira},

journal={ICLR Workshop},

year={2016}

}

Acknowledgments

This work was supported by the National Science Foundation and National Robotics Initiative (grant # IIS-1426998).